Read and Reap the Rewards

Learning to Play Atari with the Help of Instruction Manuals

Media Coverage: New Scientist, Singularity Hub, National Post

Walkthrough

The trial-and-error process of reinforcement learning (RL) is known to be sample-inefficient. Humans, by contrast, learn to perform tasks not only from interaction or demonstrations but also by reading unstructured text documents, e.g., instruction manuals or Wiki pages.

We propose the Read and Reward framework, which speeds up RL algorithms on Atari games by reading Wikipedia articles and the instruction manuals released by the Atari game developers.

The QA Extraction module (Read) extracts and summarizes relevant information from the manual.
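To make the Read step concrete, here is a toy stand-in. It is not the QA Extraction module itself, which relies on a language model. Instead, it swaps in simple word-overlap ranking to pick the manual sentences most relevant to a question. The function names and the manual snippet are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase text and return the set of alphabetic word tokens."""
    return set(re.findall(r"[a-z]+", text.lower()))


def extract_relevant(manual, question, top_k=2):
    """Return the top_k manual sentences sharing the most words with the question.

    Toy word-overlap ranking, a stand-in for a learned QA/summarization model.
    """
    # Naive sentence split after terminal punctuation.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", manual) if s.strip()]
    q_tokens = tokenize(question)
    # sorted() stays stable even with reverse=True, so ties keep manual order.
    ranked = sorted(sentences, key=lambda s: len(q_tokens & tokenize(s)), reverse=True)
    return ranked[:top_k]


# Made-up manual snippet, for illustration only.
manual = ("Ski down the slope. Pass between each pair of flags. "
          "Avoid the trees and moguls. Missing a gate adds a time penalty.")
print(extract_relevant(manual, "What should the skier avoid?", top_k=1))
# → ['Avoid the trees and moguls.']
```

A learned model would also handle paraphrases ("steer clear of" vs. "avoid") that plain word overlap misses.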

The Reasoning module (Reward), powered by a large language model (LLM), evaluates object-agent interactions based on information from the manual.
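The Reward step can be sketched in the same spirit. This sketch assumes the Reasoning module asks the LLM a yes/no question about each in-game object and maps the answer to a fixed-magnitude auxiliary reward. `ask_llm`, the prompt wording, the `fake_llm` stub, and the magnitude of 5.0 are illustrative assumptions, not the framework's actual components or values.

```python
from typing import Callable, Dict, List


def build_interaction_rewards(objects: List[str], context: str,
                              ask_llm: Callable[[str], str],
                              magnitude: float = 5.0) -> Dict[str, float]:
    """Map the LLM's yes/no verdict on hitting each object to an auxiliary reward."""
    rewards = {}
    for obj in objects:
        # Hypothetical prompt: manual context first, then a yes/no question.
        prompt = (f"{context}\n"
                  f"Question: Is it good for the player to hit the {obj}? Answer yes or no.")
        words = ask_llm(prompt).strip().lower().split()
        # Read only the first word, without trailing punctuation ("No." -> "no").
        verdict = words[0].strip(".,!?") if words else ""
        if verdict == "yes":
            rewards[obj] = magnitude    # encourage this interaction
        elif verdict == "no":
            rewards[obj] = -magnitude   # discourage this interaction
        else:
            rewards[obj] = 0.0          # unclear answer: no auxiliary signal
    return rewards


# Stub standing in for a real LLM call (hypothetical).
def fake_llm(prompt: str) -> str:
    return "No." if "bomb" in prompt.splitlines()[-1] else "Yes."


print(build_interaction_rewards(["coin", "bomb"],
                                "Collect coins for points. Touching a bomb ends the round.",
                                fake_llm))
# → {'coin': 5.0, 'bomb': -5.0}
```

Querying the LLM once per object, rather than once per frame, keeps the cost of the language model out of the RL training loop.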

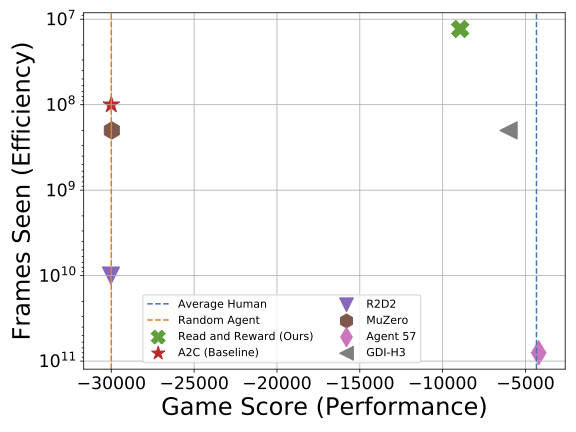

Assisted by the auxiliary rewards, a standard A2C agent (which takes a greyscale image as input and outputs an action) achieves competitive performance on Skiing, one of the hardest Atari games for RL, while using significantly fewer training frames than the state-of-the-art (SOTA) method.

Read and Reward can process information from various sources (Wikipedia articles and official manuals). In addition, Read and Reward only provides auxiliary rewards to the existing RL agent and does not modify the agent architecture, so it could be applied to other RL algorithms as well.
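Because the framework only adds a reward signal, it can be sketched as an environment wrapper that leaves the agent untouched. The wrapper below assumes a classic Gym-style `step()` returning `(obs, reward, done, info)`, a hypothetical `detect_interactions` callback that reports which objects the agent touched, and a precomputed table of per-object auxiliary rewards. `ToyEnv` is a made-up stand-in for an Atari environment.

```python
class AuxRewardWrapper:
    """Adds an auxiliary reward to the environment reward; the agent is untouched."""

    def __init__(self, env, detect_interactions, reward_table, scale=1.0):
        self.env = env
        self.detect_interactions = detect_interactions  # hypothetical callback
        self.reward_table = reward_table                # object name -> aux reward
        self.scale = scale

    def reset(self):
        return self.env.reset()

    def step(self, action):
        obs, reward, done, info = self.env.step(action)
        touched = self.detect_interactions(obs, info)
        aux = sum(self.reward_table.get(obj, 0.0) for obj in touched)
        info = dict(info, aux_reward=aux)  # expose the aux term for logging
        return obs, reward + self.scale * aux, done, info


class ToyEnv:
    """Stand-in environment: reward 1.0 per step, reports the object touched."""

    def __init__(self, touches):
        self.touches = list(touches)
        self.t = 0

    def reset(self):
        self.t = 0
        return 0

    def step(self, action):
        obj = self.touches[self.t]
        self.t += 1
        done = self.t >= len(self.touches)
        return self.t, 1.0, done, {"touched": [obj] if obj else []}


env = AuxRewardWrapper(ToyEnv(["coin", None, "bomb"]),
                       lambda obs, info: info["touched"],
                       {"coin": 5.0, "bomb": -5.0})
env.reset()
rewards, done = [], False
while not done:
    _, r, done, _ = env.step(0)  # any agent's action would go here
    rewards.append(r)
print(rewards)
# → [6.0, 1.0, -4.0]
```

An agent, A2C or otherwise, trains on the wrapped environment exactly as it would on the raw one, which is the property that lets the auxiliary reward carry over to other RL algorithms.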